Table of Links

3 End-to-End Adaptive Local Learning

3.1 Loss-Driven Mixture-of-Experts

3.2 Synchronized Learning via Adaptive Weight

4 Debiasing Experiments and 4.1 Experimental Setup

4.3 Ablation Study

4.4 Effect of the Adaptive Weight Module and 4.5 Hyper-parameter Study

6 Conclusion, Acknowledgements, and References

3.2 Synchronized Learning via Adaptive Weight

After addressing the discrepancy modeling problem, another core cause of the mainstream bias is the unsynchronized learning problem – the learning difficulties vary for different users, and users reach the performance peak during training at different speeds (refer to Figure 1 for an example). Thus, a method to synchronize the learning paces of users is desired. We devise an adaptive weight approach to achieve learning synchronization so that the dilemma of performance trade-off between mainstream users and niche users can be overcome.

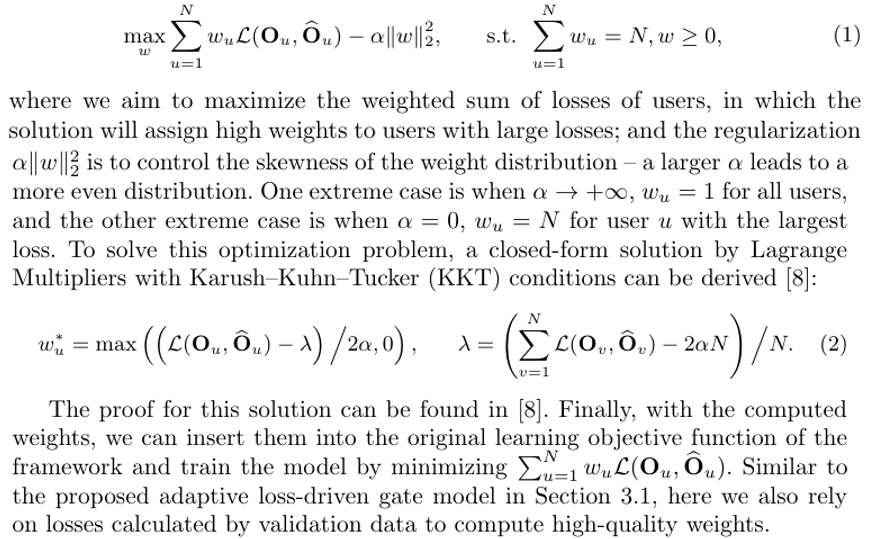

Adaptive Weight. The fundamental motivation of the proposed method lies in linking the learning status of a user to the loss function of the user at the current epoch. A high loss for a user signifies ineffective learning by the model, necessitating more epochs for accurate predictions. Conversely, a low loss indicates successful modeling, requiring less or even no further training. Moreover, we can use a weight in the loss function to control the learning pace for the user: a small weight induces slow updating, and a large weight incurs fast updating. Hence, we propose to synchronize different users by applying weights to the objective function based on losses users get currently – a user with a high loss should receive a large weight, and vice versa. We aim to achieve this intuition by solving the following optimization problem:

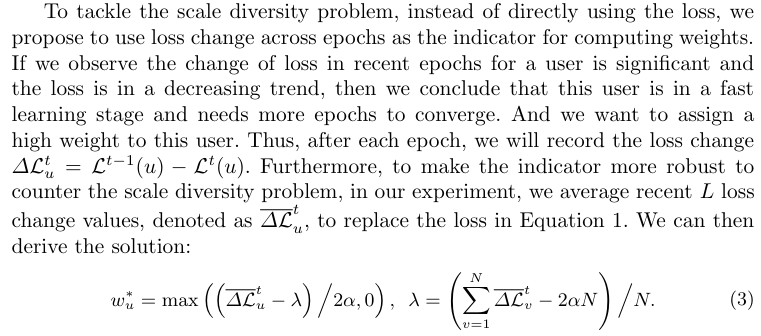

Loss Change Mechanism and Gap Mechanism. With the proposed adaptive weight approach, we take a solid step toward learning synchronization. However, two critical issues remain unaddressed. First, the scale of the loss function is innately different across different users. Usually, mainstream users possess a lower loss value than niche users because the algorithm can achieve better utility for mainstream users who are easier to model. Due to this scale diversity problem, computing weights by exact values of the loss can lead to the undesired situation that mainstream users get overly low weights and niche users get overly high weights, disturbing the learning process. On the other hand, the loss is not always stable, especially at the early stage of training. This unstable loss problem can deteriorate the efficacy of the problem adaptive weight method too.

At last, since the losses are excessively unstable at the initial stage of training and the proposed adaptive weight module heavily relies on the stability of the loss value, the proposed method cannot perform well at the initial stage of training. Hence, we propose to have a gap at the beginning for our adaptive weight method. That is, we do not apply the proposed adaptive weight method to the framework at the first T epochs. Since at the early stage of the training, all users will be at a fast learning status (consider the first 50 epochs in Figure 1), as there is no demand for learning synchronization. After T epochs of ordinary training, when the learning procedure is more stable and the loss is more reliable, we apply the adaptive weight method to synchronize the learning for different users. And this time synchronization is desired and plays an important role. The gap window T is a hyper-parameter and needs to be predefined.

Authors:

(1) Jinhao Pan [0009 −0006 −1574 −6376], Texas A&M University, College Station, TX, USA;

(2) Ziwei Zhu [0000 −0002 −3990 −4774], George Mason University, Fairfax, VA, USA;

(3) Jianling Wang [0000 −0001 −9916 −0976], Texas A&M University, College Station, TX, USA;

(4) Allen Lin [0000 −0003 −0980 −4323], Texas A&M University, College Station, TX, USA;

(5) James Caverlee [0000 −0001 −8350 −8528]. Texas A&M University, College Station, TX, USA.

This paper is