Formal Proof Systems Reveal Overlooked Ambiguities in Advanced Mathematics

11 Dec 2025

Formalisation is uncovering subtle gaps in modern mathematical proofs, from implicit conventions to non-canonical constructions that demand new rigor.

Canonical Isomorphisms In More Advanced Mathematics

11 Dec 2025

Canonical isomorphisms in advanced math aren’t as canonical as they seem. This article explores sign choices, Langlands theory, and why formalism falls short.

Reexamining Canonical Isomorphisms in Modern Algebraic Geometry

11 Dec 2025

A critical look at how mathematicians use the word “canonical,” revealing how informal shortcuts obscure the real constructions behind key theorems.

Universal Properties In Algebraic Geometry

10 Dec 2025

Universal properties promise abstraction, but localisation shows where they fail, especially in formal proofs. Learn why algebraic geometry needs concrete models.

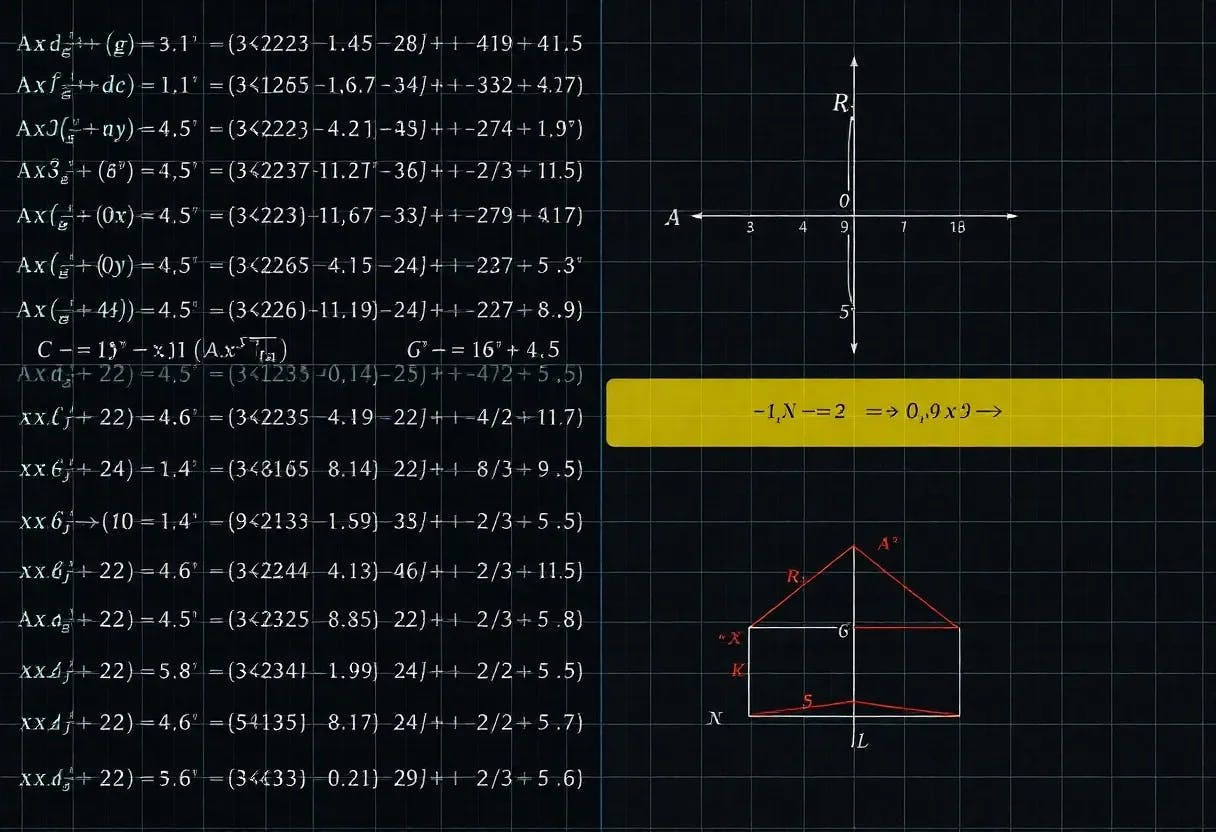

The Problem With Grothendieck’s Use Of Equality

10 Dec 2025

Grothendieck’s “canonical” equalities work on paper but clash with formal proof systems like Lean, exposing a subtle gap in algebraic geometry’s foundations.

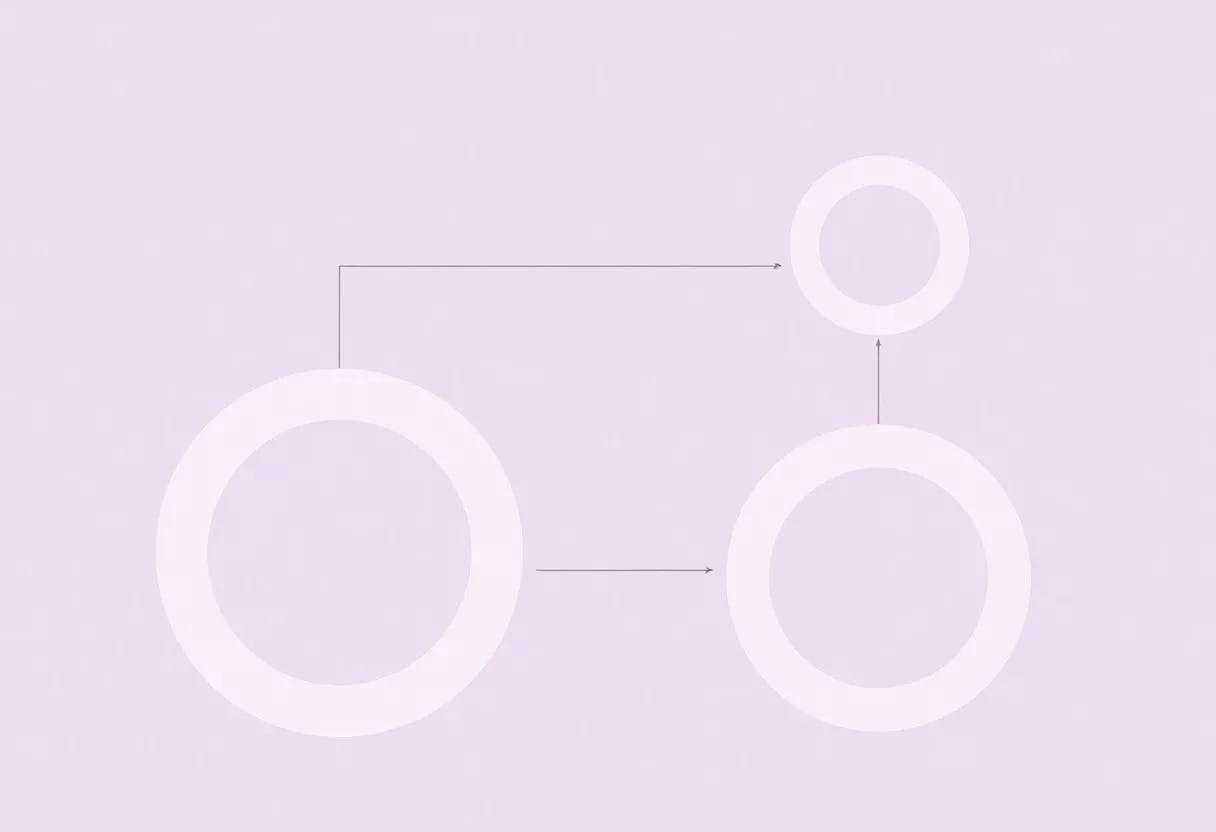

Category Theory Explains a Common Oversight in Everyday Mathematics, Study Finds

10 Dec 2025

Why common product notation hides deep structural issues in set theory, and how category theory resolves ambiguities mathematicians often overlook.

Inside the Logic of “Products” and Equality in Set Theory

10 Dec 2025

A clear, accessible explanation of universal properties, equality, and why mathematicians treat many different constructions as “the same” object.

Why Mathematicians Still Struggle to Define Equality in the Computer Age

10 Dec 2025

A mathematician explores why equality in mathematics resists formal definition, and how computer theorem provers expose gaps in our foundational intuition.

Grothendieck, Equality, and the Trouble with Formalising Mathematical Arguments

9 Dec 2025

How mathematicians use equality conflicts with formal proof systems. This article explores why canonical isomorphisms break down in computer-checked maths.