Table of Links

3. Theoretical Lenses

4. Applying the Theoretical Lenses and 4.1 Handoff Triggers: New tech, new threats, new hype

4.2. Handoff Components: Shifting experts, techniques, and data

4.3. Handoff Modes: Abstraction and constrained expertise

5. Uncovering the Stakes of the Handoff

5.1. Confidentiality is the tip of the iceberg

6.2 Lesson 2: Beware objects without experts

6.3 Lesson 3: Transparency and participation should center values and policy

8. Research Ethics and Social Impact

Acknowledgments and References

5 UNCOVERING THE STAKES OF THE HANDOFF

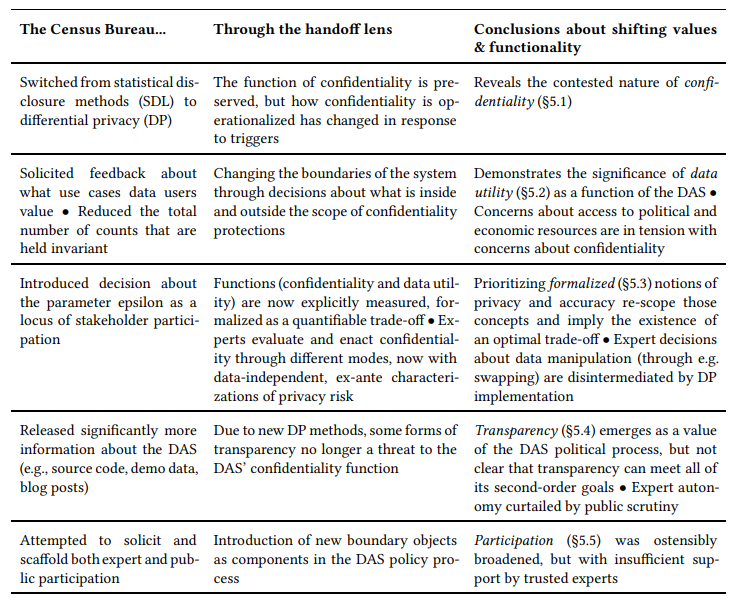

Applying the handoff model to our case as outlined in section 4, we surface several value-laden shifts in the Census Bureau’s adoption and implementation of DP, as well as the participatory processes that the Bureau introduced to negotiate this transition. We summarize these findings in Table 1.

5.1 Confidentiality is the tip of the iceberg

Through the switch from earlier SDL methods to DP, the DAS maintained the same function: protecting Census respondents’ confidentiality. The Bureau presented this change as a narrative of progress and increased effectiveness, framing DP as a “modern” alternative to prior methods and “a new, advanced, and far more powerful confidentiality protection system” [5].

The handoff model allows us to look beyond this narrative of linear progress to understand the larger social and political implications of the new DAS. While the DAS’s function remained focused on confidentiality, examining the changing components and modes used to achieve this function reveals a more complicated story. First, the notion of confidentiality is itself contested. The Bureau’s decision to pursue confidentiality through disclosure avoidance is a value-laden choice, shaped by its interpretation of the confidentiality requirement outlined in Title 13. The handoff model demonstrates that changing one part of the DAS is not merely a modular replacement of one technical component with another. Instead, the adoption of DP changed the meaning of the system’s core confidentiality function by shifting what harms the DAS was designed to protect against. (Indeed, different conceptualizations of confidentiality led to significant conflict around the Bureau’s use of DP [92].) In particular, the turn to DP enables two distinct confidentiality functions: (1) empirical protections against external reconstruction of individual records and (2) because of DP’s emphasis on future-proof theoretical guarantees, plausible deniability for the Bureau against any future harms. The latter function is a shift from earlier versions of the DAS, where these guarantees could not be rigorously formalized. This expansion of the confidentiality function aligns with the Bureau’s interpretation of the Title 13 confidentiality mandate, targeting worst-case risk and insulating the Bureau from both present and future legal liability. The choice to operationalize the DAS’s confidentiality function using DP is an important upstream policy decision but, because of the Bureau’s interpretation of its legal mandate, not one over which stakeholders outside of the Bureau had input.

Moreover, beyond the contested meaning of confidentiality, the handoff lens reveals that confidentiality was far from the only value implicated by the shift to DP.

Authors:

(1) AMINA A. ABDU, University of Michigan, USA;

(2) LAUREN M. CHAMBERS, University of California, Berkeley, USA;

(3) DEIRDRE K. MULLIGAN, University of California, Berkeley, USA;

(4) ABIGAIL Z. JACOBS, University of Michigan, USA.

This paper is