Table of Links

3 End-to-End Adaptive Local Learning

3.1 Loss-Driven Mixture-of-Experts

3.2 Synchronized Learning via Adaptive Weight

4 Debiasing Experiments and 4.1 Experimental Setup

4.3 Ablation Study

4.4 Effect of the Adaptive Weight Module and 4.5 Hyper-parameter Study

6 Conclusion, Acknowledgements, and References

4.4 Effect of the Adaptive Weight Module

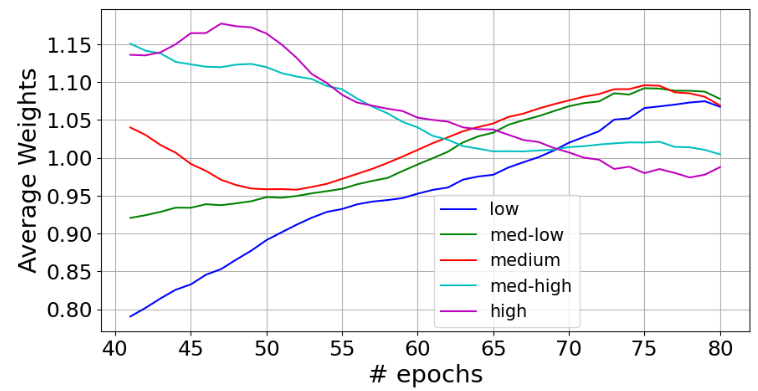

Last, we investigate the effect of the adaptive weight module and study how it synchronizes the learning paces of different users. We run TALL on the ML1M dataset and present the average weights for the five subgroups, with the gap window set to 40 (#gap = 40), in Figure 3. The adaptive weight module assigns weights dynamically to different types of users to synchronize their learning paces. Initially, mainstream users receive higher weights because they are easier to learn and have a higher upper bound of performance than niche users. Then, once mainstream users reach their peak, the model shifts its attention to niche users, who are more difficult to learn, gradually increasing the weights for ‘low’, ‘med-low’, and ‘medium’ users until the end of training. In contrast, ‘med-high’ and ‘high’ users, who are approaching convergence, need a slower learning pace to avoid overfitting, so their weights decrease. Figure 3 thus illustrates the effectiveness and dynamic nature of the proposed adaptive weight module in synchronizing the learning procedures for different types of users.
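The per-subgroup averages plotted in Figure 3 can be obtained by grouping per-user weights by subgroup label at each epoch. The following is a minimal sketch of that aggregation step only, not the authors' implementation: the function name `average_weights_by_subgroup`, the input layout (one weight and one label per user), and the toy values are assumptions made for illustration. It does not compute the adaptive weights themselves.

```python
from collections import defaultdict

# Subgroup labels as named in the text, ordered from niche to mainstream.
SUBGROUPS = ["low", "med-low", "medium", "med-high", "high"]


def average_weights_by_subgroup(weights, groups):
    """Return the mean weight of each subgroup for one training epoch.

    weights: per-user weights (hypothetical input, one float per user)
    groups:  subgroup label of each user, aligned with `weights`
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for weight, group in zip(weights, groups):
        totals[group] += weight
        counts[group] += 1
    # Subgroups with no users in this batch are skipped.
    return {g: totals[g] / counts[g] for g in SUBGROUPS if counts[g] > 0}


# Toy data only; these numbers are not results from the paper.
toy_weights = [0.2, 0.4, 1.0, 0.5]
toy_groups = ["low", "low", "high", "medium"]
toy_averages = average_weights_by_subgroup(toy_weights, toy_groups)
```

Calling this once per epoch and collecting the returned dictionaries gives one curve per subgroup, which is the kind of view the figure presents.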

4.5 Hyper-parameter Study

We have also conducted a comprehensive hyper-parameter study investigating the impact of three hyper-parameters in TALL: (1) the gap window in the adaptive weight module; (2) α in the adaptive weight module; and (3) the number of experts. The complete results are available at https://github.com/JP-25/end-To-end-Adaptive-Local-Leanring-TALL-/blob/main/Hyperparameter Study.pdf.

Authors:

(1) Jinhao Pan [0009-0006-1574-6376], Texas A&M University, College Station, TX, USA;

(2) Ziwei Zhu [0000-0002-3990-4774], George Mason University, Fairfax, VA, USA;

(3) Jianling Wang [0000-0001-9916-0976], Texas A&M University, College Station, TX, USA;

(4) Allen Lin [0000-0003-0980-4323], Texas A&M University, College Station, TX, USA;

(5) James Caverlee [0000-0001-8350-8528], Texas A&M University, College Station, TX, USA.

This paper is